While working on Trello2iCal, an awesome webapp that schedules Trello cards on your calendar by their due dates, I worked a lot with the iCalendar protocol (or iCal). It turned out to be the biggest source of bugs and misbehaviors, with me a very close second. It didn’t help that Apple decided to name their calendar app iCal, adding noise to search results that were scarce to begin with.

iCalendar itself has two versions, defined by two RFCs: RFC 2445 from 1998 and RFC 5545 from 2009. It’s a text-based protocol: a calendar contains some metadata and a list of “components” that represent events, todos, alarms, and other calendar-related structures. Each component is a list of “content lines”, which are mostly “key:value” lines (the key can also carry parameters separated by semicolons, as in the DTSTART lines below). When you put these calendars online and constantly update them, like Trello2iCal does, it’s called a feed (much like an RSS feed). Here is an iCal feed with two events:

```
BEGIN:VCALENDAR
VERSION:2.0
PRODID:-//sveder.com/trello_to_ical//EN
BEGIN:VEVENT
SUMMARY:Event One
DTSTART;TZID=UTC;VALUE=DATE-TIME:20120805T090000Z
DTEND;TZID=UTC;VALUE=DATE-TIME:20120805T090500Z
DTSTAMP;VALUE=DATE-TIME:20120918T230955
UID:4fe6645de5a5b15c4f51136ftrello_to_ical
DESCRIPTION:Card URL -\nsome url
END:VEVENT
BEGIN:VEVENT
SUMMARY:Event Two
DTSTART;TZID=UTC;VALUE=DATE-TIME:20120129T100000Z
DTEND;TZID=UTC;VALUE=DATE-TIME:20120129T100500Z
DTSTAMP;VALUE=DATE-TIME:20120918T230955
UID:4f00df4fcf0b6aef7001bd90trello_to_ical
DESCRIPTION:Card URL -\nsome_url
END:VEVENT
END:VCALENDAR
```
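
To make the structure concrete, here is a minimal Python sketch, standard library only, that serializes events into a feed shaped like the one above. The event dictionary layout and the `build_feed` and `fmt_utc` names are hypothetical, chosen for illustration; this is not how Trello2iCal or the iCalendar library does it. It always emits `VERSION:2.0` and a `DTSTAMP`, writes times in UTC with a trailing `Z` and no `TZID` parameter (RFC 5545 says `TZID` must not be applied to UTC times), and ends every content line with CRLF. Text escaping and line folding are deliberately left out to keep it short.

```python
from datetime import datetime, timezone


def fmt_utc(dt: datetime) -> str:
    """Format an aware datetime as a UTC DATE-TIME value, e.g. 20120805T090000Z."""
    return dt.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")


def build_feed(events, prodid="-//example.com//feed-sketch//EN", now=None):
    """Serialize event dicts into an iCalendar string.

    Hypothetical input layout: each dict has 'uid', 'summary', and
    timezone-aware 'start'/'end' datetimes. SUMMARY is not escaped here.
    """
    stamp = fmt_utc(now or datetime.now(timezone.utc))
    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",  # the iCalendar spec version, not your app's version
        f"PRODID:{prodid}",
    ]
    for ev in events:
        lines += [
            "BEGIN:VEVENT",
            f"UID:{ev['uid']}",
            f"DTSTAMP:{stamp}",  # required in VEVENT by RFC 5545
            f"DTSTART:{fmt_utc(ev['start'])}",  # UTC form: trailing Z, no TZID
            f"DTEND:{fmt_utc(ev['end'])}",
            f"SUMMARY:{ev['summary']}",
            "END:VEVENT",
        ]
    lines.append("END:VCALENDAR")
    return "\r\n".join(lines) + "\r\n"  # every content line ends with CRLF


feed = build_feed(
    [{
        "uid": "event-one@example.com",
        "summary": "Event One",
        "start": datetime(2012, 8, 5, 9, 0, tzinfo=timezone.utc),
        "end": datetime(2012, 8, 5, 9, 5, tzinfo=timezone.utc),
    }],
    now=datetime(2012, 9, 18, 23, 9, 55, tzinfo=timezone.utc),
)
print(feed)
```

Passing `now` explicitly keeps the output reproducible; in a live feed you would let it default to the current time.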

If I were a smartass, I would really question the decision to invent yet another textual protocol instead of using XML, which is quite suitable for this. Had the protocol been XML-based, it might have been easier to implement and thus more popular, which would have resulted in a bigger knowledge base and better tools. One tool I do have to point out, because it saved me a lot of time, is the iCal validator (thanks Ben Fortuna!), but it only checks whether your iCal feed is valid according to the spec, not whether it survives the real-world quirks below.

Below are the issues and quirks I ran into, with their solutions or workarounds, in no particular order.

- iCalendar clients, especially Google Calendar, are very bad at refreshing their data. I’ve had reports of users waiting over 24 hours for their calendars to sync. There is a workaround, but if you’re doing active planning, this is unacceptable. It would be easy to solve for Google Calendar (just add a button the user can click to refresh), but I guess it’s not a priority. Outlook and Apple Calendar are a bit better, syncing a few times an hour, but AFAIK they lack a refresh option as well. I wish the protocol had a way to tell clients the maximum time between syncs.

- Google Calendar refused to load iCalendar feeds until I added a robots.txt file allowing Googlebot access. That doesn’t make much sense to me, since this isn’t a crawler but a protocol client (probably better called an “agent”).

- While developing, I put 0.1 as my iCalendar VERSION, mistaking it for the version of my app. Every iCalendar client accepted my feed except Apple Calendar, which silently rejected it. Apparently, the only value it considers valid is 2.0, which, to be fair, is the value RFC 5545 specifies.

- Apple Calendar was also the only client that refused to parse a feed without a DTSTAMP property, which RFC 5545 does list as required for events.

- All-day events – there are many methods that don’t work or are wrong:

  - WRONG: Adding one day to a datetime object. That just makes a 24-hour meeting, and it doesn’t matter if you start at midnight and end at midnight either.

  - WRONG: Setting the start datetime equal to the end. That produces undefined behavior.

  - WRONG: Removing the time component and setting the start and end to the same day. That is an “event on the day”, not a full-day event; it is what you would use for birthdays, for example.

  The RIGHT way is to remove the time component and set the end date to the day after the start. If not for the refresh problem above, this would have been easier to test, but when refreshing is a 5-step process, it becomes tedious.
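
In content-line terms, the right way means `DTSTART` and `DTEND` both carry `VALUE=DATE` with no time part, and because `DTEND` is exclusive, a one-day event ends on the following day. Here is a minimal Python sketch using only the standard library; the `all_day_event_lines` helper and its parameters are hypothetical, for illustration only.

```python
from datetime import date, timedelta
from typing import List


def all_day_event_lines(uid: str, summary: str, day: date, stamp: str) -> List[str]:
    """Content lines for a single all-day event on `day` (hypothetical helper).

    DTSTART/DTEND use VALUE=DATE, so there is no time component, and DTEND
    is exclusive: a one-day event ends on the following day.
    """
    next_day = day + timedelta(days=1)  # timedelta handles month/year rollover
    return [
        "BEGIN:VEVENT",
        f"UID:{uid}",
        f"DTSTAMP:{stamp}",
        f"DTSTART;VALUE=DATE:{day:%Y%m%d}",
        f"DTEND;VALUE=DATE:{next_day:%Y%m%d}",
        f"SUMMARY:{summary}",
        "END:VEVENT",
    ]


for line in all_day_event_lines("deadline-1@example.com", "Deadline",
                                date(2012, 12, 31), "20120918T230955Z"):
    print(line)
```

For December 31, 2012 this produces `DTSTART;VALUE=DATE:20121231` and `DTEND;VALUE=DATE:20130101`; letting `timedelta` do the date arithmetic avoids hand-rolled month and year rollover bugs.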

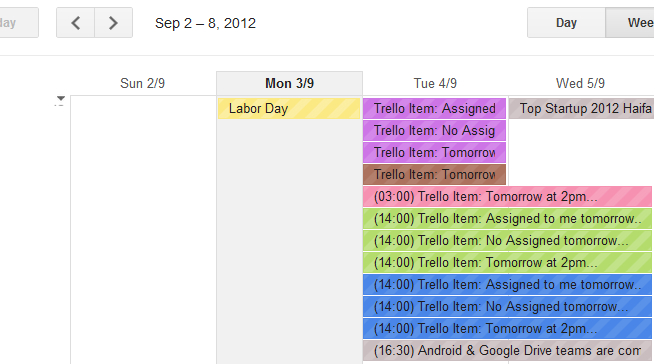

A mess of iCal Calendars and Events.

- New lines in the description – here Outlook is the outlier: encoding the line breaks as “=0d=0a” worked there (this is quoted-printable encoding, probably a relic from Outlook Express, where it was the way to encode such characters in emails). The RIGHT way to do it is “\n”. No, not the ASCII newline character (0x0a), but the actual two characters: a backslash “\” followed by the letter “n”. Fun.

- I used the iCalendar Python library. It is well maintained, but looking at the code, it is pretty gnarly. It also doesn’t handle Unicode in a sane way, so I had to modify it in a few places, which I’m now retroactively opening bugs for in its GitHub repo.

- A current issue I’m having – both Apple Calendar and Outlook have no problem parsing the Unicode I’m serving, but Google Calendar insists on turning those characters into question marks. I’m pretty sure the problem is somewhere in my HTTP headers, but I haven’t solved it yet.
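
The newline and Unicode items are both about how text goes over the wire. Below is a minimal Python sketch, standard library only, of two rules from RFC 5545: escaping TEXT values (backslash, semicolon, comma, and line breaks become `\\`, `\;`, `\,`, and `\n`), and folding content lines at 75 octets without cutting a multi-byte UTF-8 character in half. The names `escape_text`, `fold_line`, and `FEED_HEADERS` are my own, not part of any library. The header value is an untested assumption: declaring `charset=utf-8` explicitly is one plausible thing to check for the question-mark problem, not a confirmed fix.

```python
def escape_text(value: str) -> str:
    """Escape a TEXT property value per RFC 5545, section 3.3.11."""
    value = value.replace("\\", "\\\\")  # backslash first so later escapes aren't doubled
    value = value.replace(";", "\\;").replace(",", "\\,")
    # A real line break becomes the two characters backslash + "n".
    return value.replace("\r\n", "\\n").replace("\n", "\\n")


def fold_line(line: str, limit: int = 75) -> str:
    """Fold a content line so no physical line exceeds `limit` octets.

    Splits only between characters, so multi-byte UTF-8 sequences stay
    intact; continuation lines start with one space (RFC 5545, section 3.1).
    """
    parts, current, size = [], "", 0
    for ch in line:
        width = len(ch.encode("utf-8"))
        # Continuation lines spend one octet on the leading space.
        budget = limit if not parts else limit - 1
        if size + width > budget:
            parts.append(current)
            current, size = "", 0
        current += ch
        size += width
    parts.append(current)
    return "\r\n ".join(parts)


# Assumption: an explicit charset is worth trying for clients that mangle
# non-ASCII text; this is not a confirmed fix for Google Calendar.
FEED_HEADERS = {"Content-Type": "text/calendar; charset=utf-8"}

description = "Card URL -\nhttps://example.com/card, café"
content_line = fold_line("DESCRIPTION:" + escape_text(description))
body = (content_line + "\r\n").encode("utf-8")  # serve these bytes with FEED_HEADERS
print(content_line)
```

Folding counts octets rather than characters because the 75-octet limit applies to the encoded bytes; counting characters would let lines full of accented or non-Latin text run past the limit.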

To be honest, I hope I didn’t come off as too critical of the protocol. Passing complex data like calendar events is a very hard problem – you need to take into account time zones, multiple people, multiple fields, recurring events, all-day events, free/busy times, event updates, event deletion, and a myriad of other scenarios. iCalendar is a solution, which is better than none, and a lot of the quirks can be blamed on calendar clients, but I still find this whole environment very unfriendly and mediocre at best.